Clinc

UX Research Case Study

Streamlining the Conversational AI Creation Experience

Overview

The primary objective of this project was to streamline the conversational AI creation process and make it accessible to clients across industries, regardless of technical background. We focused on simplifying the AI training process so that non-technical users could build and deploy AI-driven chatbots efficiently. By reducing complexity and improving the overall user experience, we aimed to help clients use conversational AI technology effectively.

Navigating the Complexity of Conversational AI Creation

Challenges

User Experience Hurdles

Complexity for Non-Technical Users: Many users, especially those without a technical background, found the process of creating and customizing AI chatbots on our platform to be daunting and non-intuitive. This complexity hindered their ability to leverage the platform's full potential.

Navigation and Interface Issues: The platform's navigation and interface design were not user-friendly, causing confusion and frustration among users trying to accomplish basic tasks.

Inefficiencies in Workflow

Repetitive and Time-Consuming Tasks: The existing workflow for building and training chatbots was not streamlined, often requiring repetitive and time-consuming tasks. This inefficiency led to prolonged development times and a steep learning curve for new users.

Lack of Automation and Guidance: Users were often left to figure out the process on their own, lacking automated tools and guidance that could help them navigate the AI training process more efficiently.

Focus

Conduct Comprehensive UX Research

Perform user interviews, card sorting tests, competitive analysis, and usability testing to identify and understand the usability challenges in the current AI creation process.

Develop a More Intuitive User Flow

Create a simplified and more intuitive user flow that allows clients to easily build and customize their AI chatbots. Focus on reducing the number of steps and making the interface more user-friendly.

Implement a Guided AI Training Module

Design and develop a guided AI training module that assists users in training their chatbots with industry-specific data. This module should include step-by-step instructions, automated suggestions, and support to ensure users can effectively train their chatbots.

Test the New Interface and Training Module

Conduct thorough testing of the new interface and training module both internally and with clients from various industries. Gather feedback to ensure the new design is widely applicable, easy to use, and meets the diverse needs of all users.

By addressing these challenges and focusing on these areas, this initiative aimed to create a more user-friendly and efficient platform for building and deploying AI-driven chatbots, ultimately empowering clients to utilize AI technology more effectively.

Unearthing Insights for Smarter Conversations

Research Overview

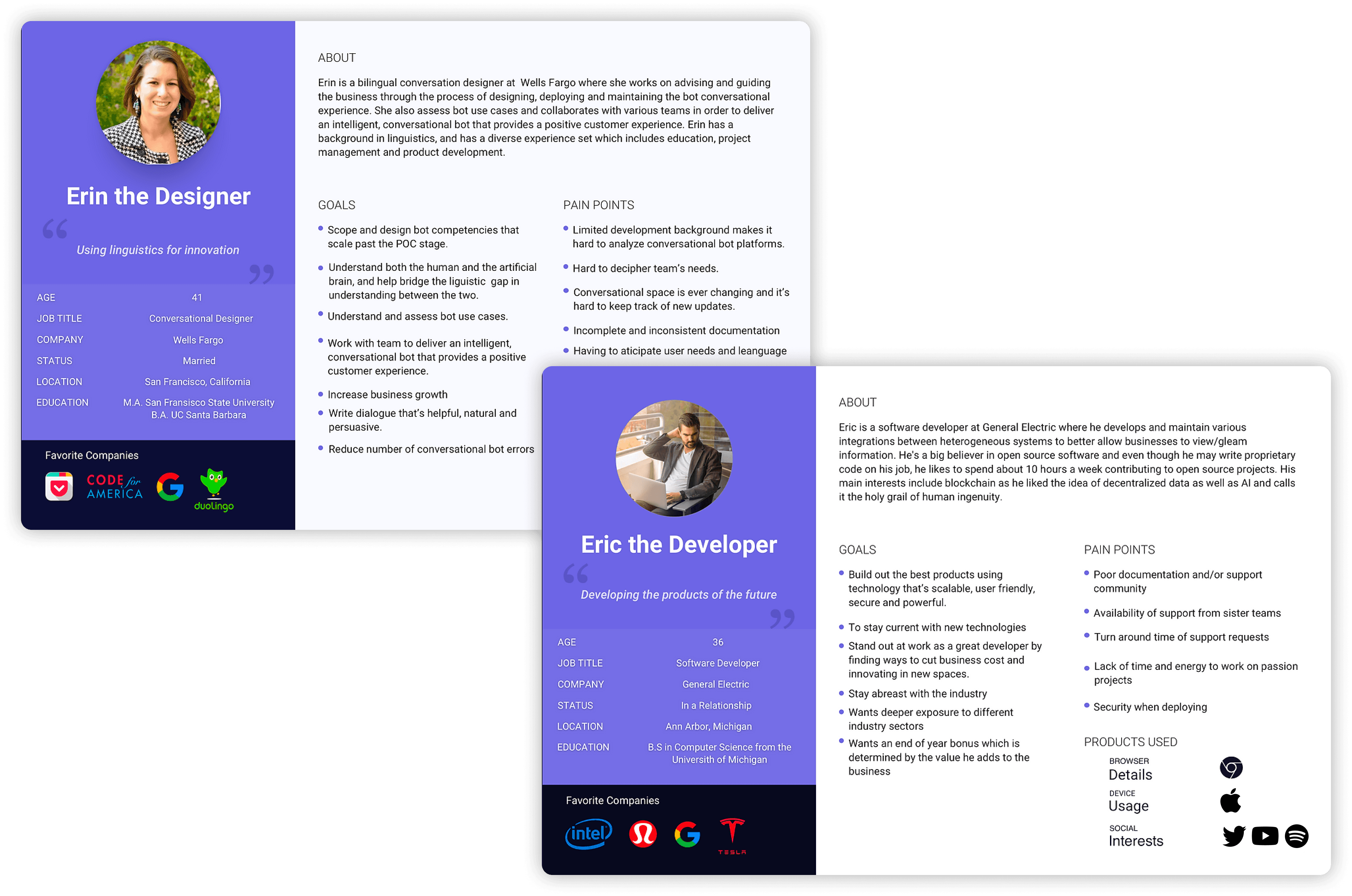

Our research aimed to identify the root causes of the challenges our users faced and to turn them into actionable insights for the platform's evolution. We combined four methods (user interviews, card sorting, usability testing, and competitive analysis) to capture a holistic view of user needs, preferences, and pain points.

💬 User Interviews

Objective: To understand the pain points and needs of users when creating and customizing AI chatbots on the platform.

Methodology:

Participants: 8 users from various industries, including finance/banking, healthcare, and gaming.

Interview Process: Semi-structured interviews conducted via video calls, each lasting approximately 45 minutes.

Key Findings:

Complexity in Initial Setup: 70% of participants found the initial setup process confusing and time-consuming.

Lack of Guidance: 60% of users felt that the platform lacked sufficient guidance and tutorials.

Customization Difficulties: 50% of users reported difficulty in customizing chatbots to meet their specific needs without technical support.

Positive Feedback: 80% appreciated the flexibility of the platform once they became familiar with it.

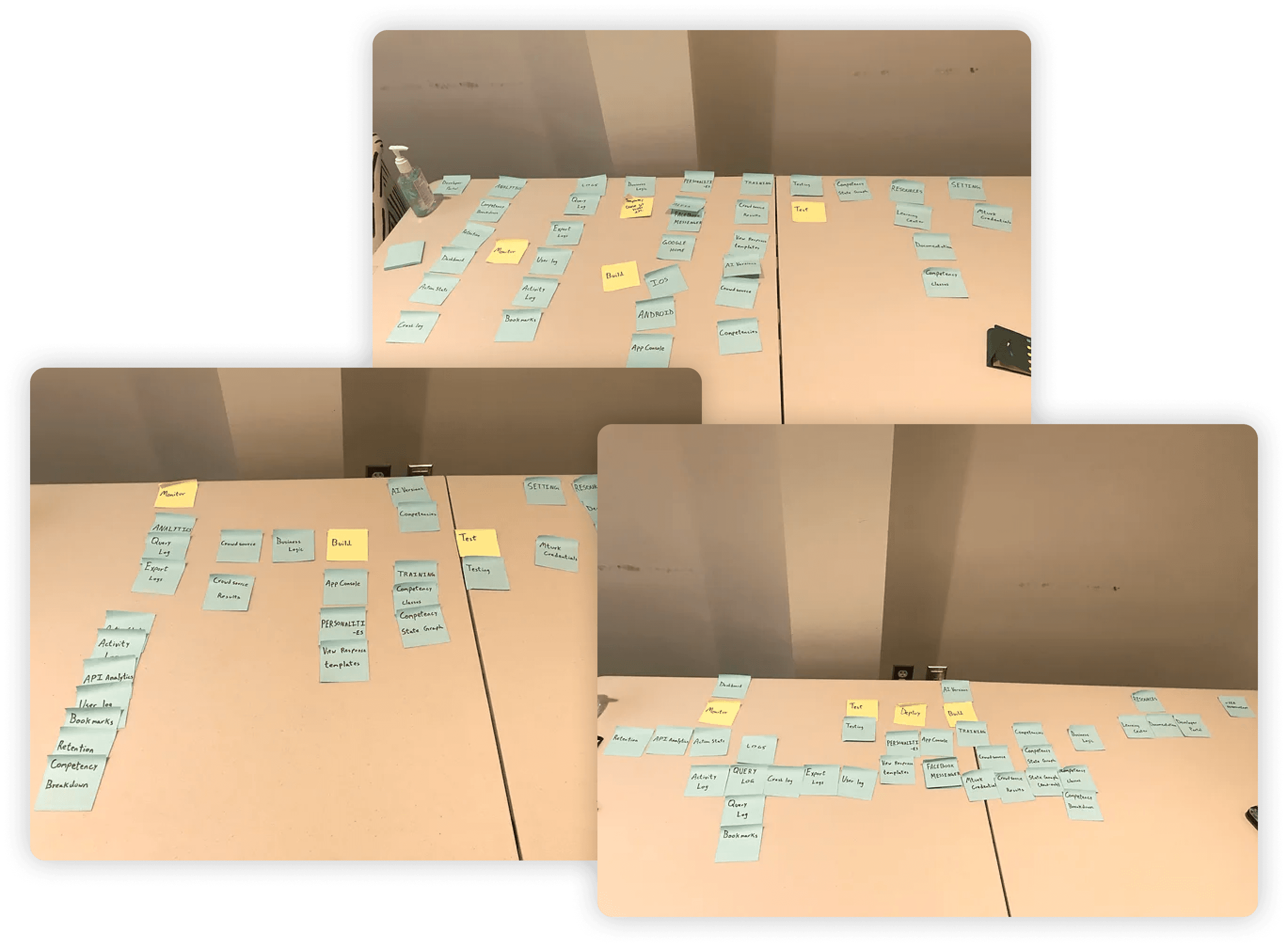

🗂️ Card Sorting

Objective: To improve the information architecture of the platform by understanding how users categorize different functionalities.

Methodology:

Participants: 16 internal engineers from the Core Platform team.

Type of Card Sorting: Open card sorting conducted in person with sticky notes.

Process: Participants were given 30+ cards, each representing a different feature or page on the platform. They were asked to group the cards into categories that made sense to them and label each category.

Key Findings:

Common Categories Identified

Setup and Configuration: Create new chatbot, import data, set up flow state.

Training and Data Input: Train AI, import data, backup data, restore data.

Customization and Personalization: Customize responses, manage templates, configure notifications.

Deployment and Monitoring: Deploy and configure API, monitor chatbot activity, view analytics, view reports.

Support and Documentation: Access documentation, customer support, access tutorials.

Confusion Points

AI Training and Data Input: Participants had difficulty categorizing features related to AI training and data input, indicating a need for clearer labeling and organization.

Overlap in Categories: Some features, such as Import Data, were placed in different categories by different participants, suggesting a need for more distinct categorization.
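One common way to quantify this kind of overlap in an open card sort is a co-occurrence score: for each pair of cards, the share of participants who placed both in the same group. The sketch below is illustrative only. The card names come from the categories above, but the four participant groupings are hypothetical and are not the study's data.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical open card sort results (NOT the study's data).
# Each participant maps their own category labels to the cards they grouped.
sorts = [
    {"Setup": ["Create new chatbot", "Import data"],
     "Training": ["Train AI", "Backup data"]},
    {"Getting started": ["Create new chatbot"],
     "Data": ["Import data", "Train AI", "Backup data"]},
    {"Setup": ["Create new chatbot", "Import data"],
     "Training": ["Train AI"],
     "Admin": ["Backup data"]},
    {"Setup": ["Create new chatbot"],
     "Training": ["Train AI", "Import data"],
     "Data management": ["Backup data"]},
]


def co_occurrence(sorts):
    """Return, per card pair, the fraction of participants who grouped both together."""
    counts = defaultdict(int)
    for sort in sorts:
        for cards in sort.values():
            # Sort card names so each pair has one canonical key.
            for a, b in combinations(sorted(cards), 2):
                counts[(a, b)] += 1
    return {pair: n / len(sorts) for pair, n in counts.items()}


agreement = co_occurrence(sorts)
for pair, share in sorted(agreement.items(), key=lambda item: -item[1]):
    print(f"{pair[0]} + {pair[1]}: {share:.0%}")
```

In this made-up data, Import data pairs with Create new chatbot as often as it pairs with Train AI, which is the pattern a card placed in several categories would produce. No single home for such a card wins a clear majority, so it is a candidate for relabeling or for surfacing in more than one place.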

Actionable Insights

Simplify Labeling: Use plainer, more intuitive labels for features related to AI training.

Better Navigation: Group related functionalities together to better match user expectations.

Improve Onboarding: Use the insights to improve the onboarding process, making it easier for users to understand the different sections and features of the platform.

🧐 Usability Testing

Objective: To test the usability of the old and new interfaces and the training module to ensure they meet user needs and are easy to use.

Methodology:

Participants: 24 users, including a mix of existing and new users from various industries (finance, healthcare, quick service restaurants, gaming, and automotive).

Test Scenarios: Participants were asked to complete specific tasks such as setting up a new chatbot, customizing responses, and deploying the chatbot.

Process: Observational testing with sessions recorded for detailed analysis. Each session lasted approximately 60-90 minutes.

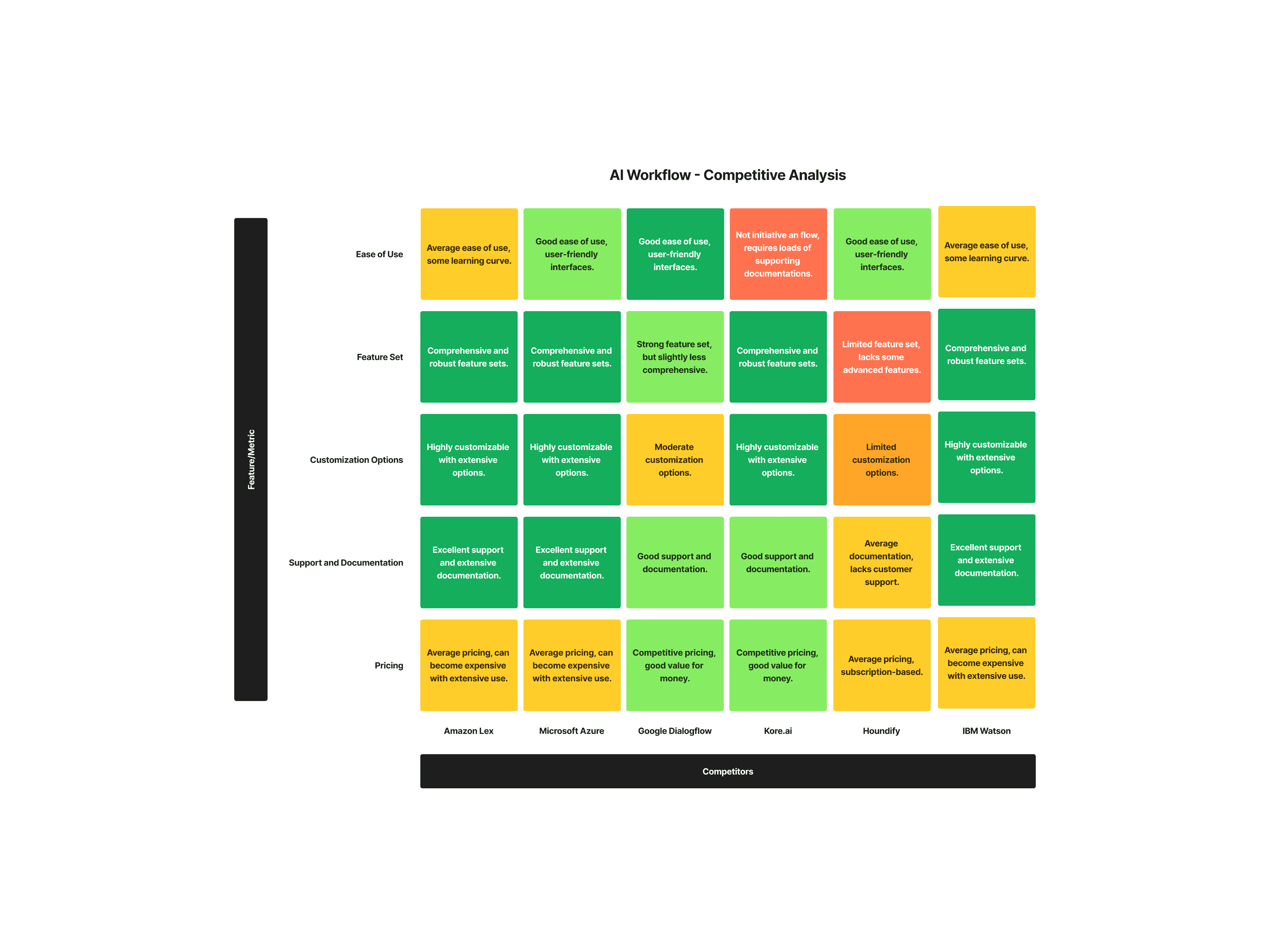

🔍 Competitive Analysis

Objective: To benchmark the platform against key competitors and identify opportunities for improvement.

Methodology:

Competitors Analyzed: Amazon Lex, Microsoft Azure, Google Dialogflow, Kore.ai, Houndify, IBM Watson.

Parameters for Comparison:

Ease of Use

Feature Set

Customization Options

Support and Documentation

Pricing

From Insights to Action: Charting the Path Forward

Analysis of Research Findings

Based on comprehensive UX research, including user interviews, card sorting tests, usability testing, and competitive analysis, we identified several key areas for improvement. These insights have guided the redesign of our platform, leading to the creation of a new site map, a guided tour feature, and more detailed and user-friendly documentation. The goal of these changes is to enhance the overall user experience, making the platform more intuitive, efficient, and accessible for users across various industries.

Key Action Items:

Redesign of the Site Map

Introduction of a Guided Tour Feature

Enhanced Documentation and Support Resources

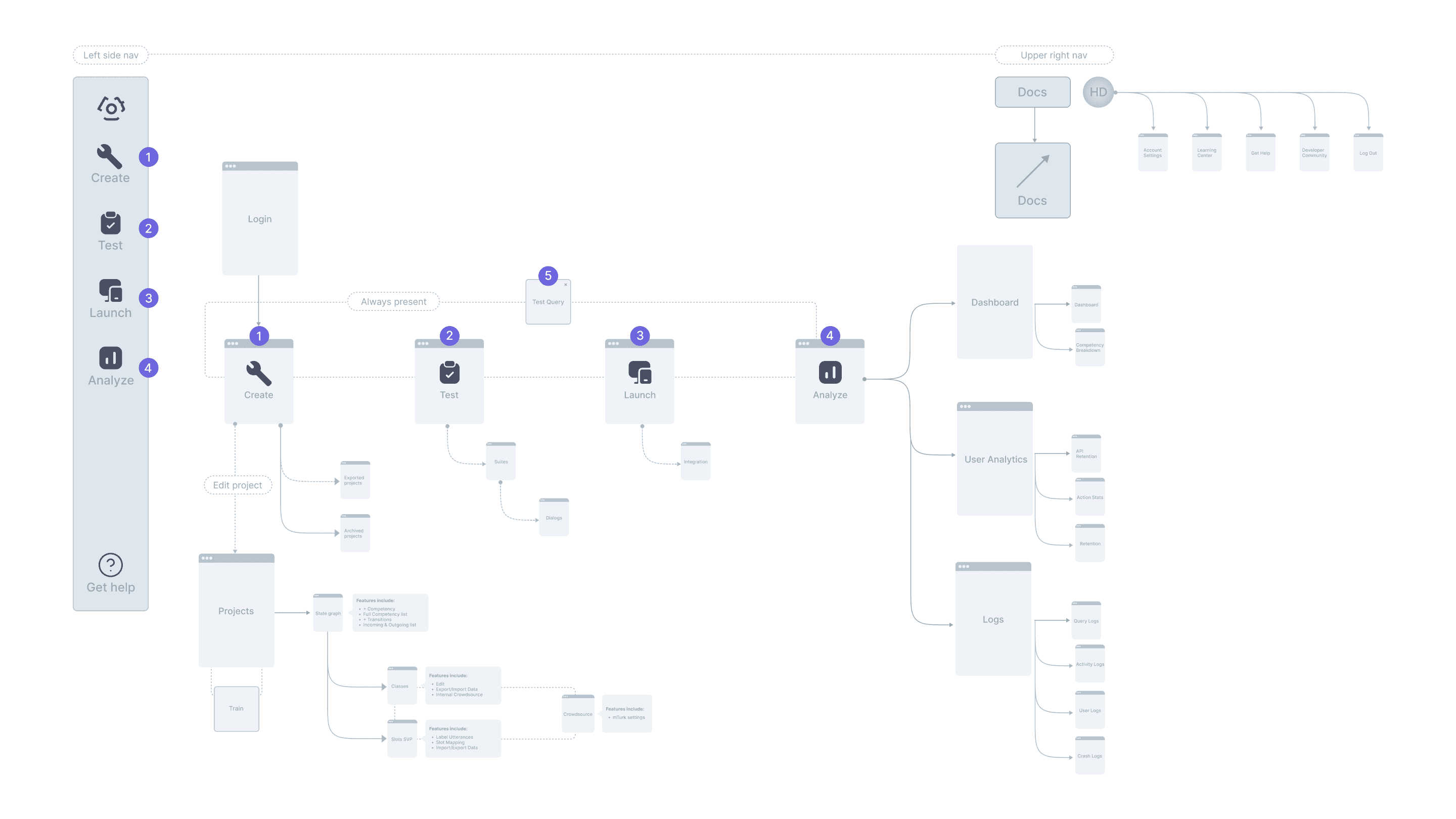

Redesign of the Site Map

Objective: To streamline navigation and improve the overall user experience by creating a more intuitive and logical structure.

Insights from Research:

User Interviews: Users struggled with finding specific features and navigating between different sections of the platform.

Card Sorting Tests: Participants grouped features in ways that highlighted the need for clearer categorization and labeling.

Usability Testing: Participants often got lost or confused when trying to complete tasks due to unclear navigation paths.

Implementation:

Developed a new site map based on the natural grouping of features identified in the card sorting tests.

Simplified the navigation structure to reduce the number of clicks needed to access key functionalities.

Implemented a breadcrumb trail to help users keep track of their location within the platform.

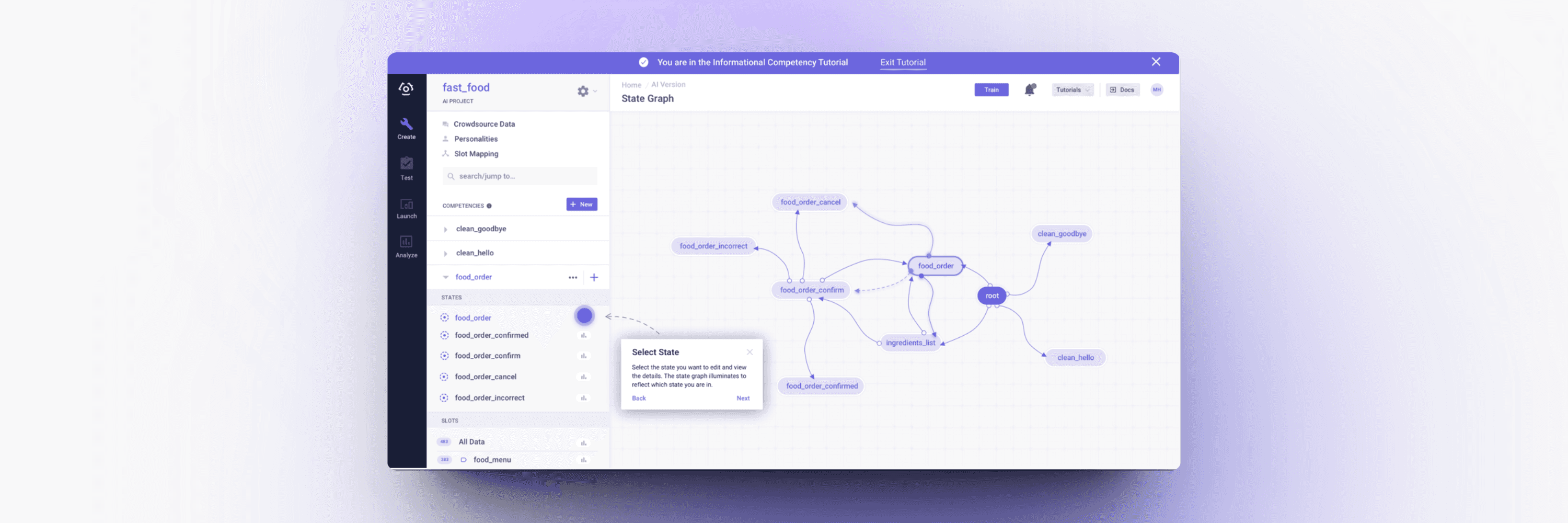

Introduction of a Guided Tour Feature

Objective: To assist new users in quickly understanding how to use the platform effectively through an interactive and informative onboarding process.

Insights from Research:

User Interviews: Many users expressed a need for better guidance and tutorials when first using the platform.

Usability Testing: Users appreciated clear instructions and step-by-step guidance when performing tasks.

Competitive Analysis: Competitors with strong onboarding experiences (e.g., Microsoft Azure, Google Dialogflow) had higher user satisfaction.

Implementation:

Worked with the design and engineering teams to design and integrate an interactive guided tour that walks users through the essential features and workflows of the platform.

Included tooltips, contextual help, and step-by-step instructions to assist users in completing initial setup and customization tasks.

Provided the option to revisit the guided tour at any time through the help menu.

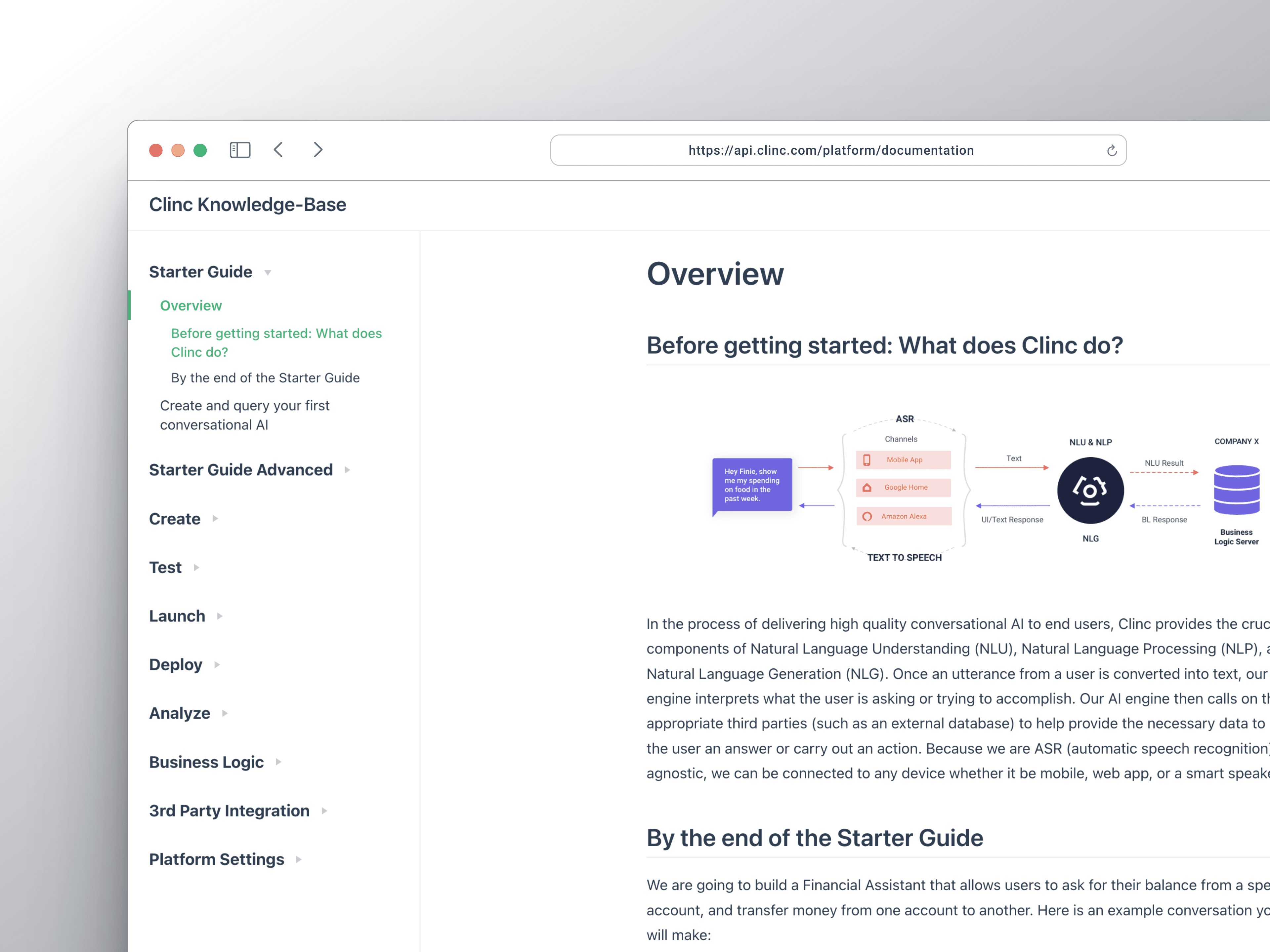

Enhanced Documentation and Support Resources

Objective: To provide comprehensive and accessible support resources that cater to both technical and non-technical users.

Insights from Research:

User Interviews: Users frequently mentioned the need for more detailed and easily accessible documentation.

Usability Testing: Participants struggled with terminology and understanding certain features due to insufficient documentation.

Competitive Analysis: Competitors with extensive and well-organized documentation (e.g., IBM Watson, Microsoft Azure) were preferred by users.

Implementation:

Expanded the documentation to include more detailed guides, FAQs, and troubleshooting sections.

Created video tutorials, GIFs, and interactive help guides to cater to different learning preferences.

Improved the search functionality within the documentation to help users find information quickly and efficiently.

The Tangible Impact of Our Endeavors

By addressing the key pain points identified through our research, we have created a more user-centric platform that not only meets the needs of our diverse user base but also sets a new standard for usability and accessibility in the AI chatbot industry.

Quantitative Results

🚀 Improved User Engagement

We observed a 35% increase in user engagement, as indicated by time spent on the platform and interaction with key features.

Half of new users (50%) used the guided tour, suggesting that it is an effective onboarding aid.

⏱️ Enhanced User Efficiency

Task completion times decreased by an average of 25%, indicating a more efficient user flow.

The number of support requests related to navigation dropped by 40%, reflecting the improved intuitiveness of the platform.

😊 Increased User Satisfaction

Post-implementation surveys indicated a 45% increase in overall user satisfaction.

Positive feedback on the updated documentation rose by 60%, with users appreciating the clarity and helpfulness of the resources.
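Assuming the figures above are relative changes against a pre-redesign baseline, each one can be computed as shown in the sketch below. The baseline and post-redesign values are hypothetical placeholders chosen for illustration; the underlying measurements are not reported in this case study.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent (negative means a decrease)."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


# Hypothetical average task completion times in minutes (not measured values).
baseline_minutes = 12.0
redesign_minutes = 9.0

change = percent_change(baseline_minutes, redesign_minutes)
print(f"Task completion time changed by {change:.0f}%")
```

With these placeholder values the function returns -25.0, that is, a 25% decrease. Reporting the baseline alongside the percentage makes results like these easier to interpret and compare.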

Long-Term Impact

The changes implemented have not only improved the user experience but have also positioned the platform as a more competitive and attractive option in the market. We anticipate that these improvements will contribute to increased user acquisition and retention, and to a stronger brand reputation in the long term.

Reflecting Across the Journey

As we reach the conclusion of this case study, it's important to reflect on the journey we've undertaken. From the initial stages of user interviews to the final implementation of a more intuitive and user-friendly platform, each step has been a testament to the power of user-centered design and meticulous research.

Key Achievements

We successfully transformed complex user data into actionable insights, leading to a significant redesign of our site map and user flow.

Our efforts in developing an on-site guided tour and updating the documentation have markedly improved user engagement and satisfaction.

The quantitative results speak volumes, with notable increases in user efficiency, engagement, and overall satisfaction.